si-lang.htm

From Wikipedia and other sources as early as 090902.

As part of a collection on Sign Language by U Kyaw Tun (UKT) (M.S., I.P.S.T., USA) and staff of Tun Institute of Learning (TIL). Not for sale. No copyright. Free for everyone. Prepared for students and staff of TIL Research Station, Yangon, MYANMAR: http://www.tuninst.net, www.romabama.blogspot.com

Introduction

History of Sign language

Linguistics of Sign

Sign languages vs. oral languages

Spatial grammar and simultaneity

Classification of sign languages

Typology of sign languages

Written forms of sign languages

Telecommunications facilitated signing

Home Sign

Use of Signs in hearing communities

Gestural theory of human language origins

Primate use of sign language

Deaf communities and deaf culture

Legal recognition

A sign language (also signed language) is a language which, instead of acoustically conveyed sound patterns [sound waves using sound energy], uses visually transmitted sign patterns (manual communication, body language) to convey meaning — simultaneously combining hand shapes, orientation and movement of the hands, arms or body, and facial expressions to fluidly express a speaker's thoughts.

Wherever communities of deaf people exist, sign languages develop. Their complex spatial grammars are markedly different from the grammars of spoken languages.[1][2] Hundreds of sign languages are in use around the world and are at the cores of local Deaf cultures. Some sign languages have obtained some form of legal recognition, while others have no status at all.

One of the earliest written records of a signed language dates from the fifth century BC, in Plato's Cratylus [UKT: see the accompanying file - crat.htm], where Socrates says: "If we hadn't a voice or a tongue, and wanted to express things to one another, wouldn't we try to make signs by moving our hands, head, and the rest of our body, just as dumb people do at present?" [3] It seems that groups of deaf people have used signed languages throughout history.

In 2nd-century Judea, the Mishnah tractate Gittin[4] stipulated that, for the purpose of commercial transactions, "A deaf-mute can hold a conversation by means of gestures. Ben Bathyra says that he may also do so by means of lip-motions." This teaching was well known in Jewish society, where study of the Mishnah was compulsory from childhood.

In 1620, Juan Pablo Bonet published Reducción de las letras y arte para enseñar a hablar a los mudos (‘Reduction of letters and art for teaching mute people to speak’) in Madrid. It is considered the first modern treatise on phonetics and logopedia (speech therapy), setting out a method of oral education for deaf people that used manual signs, in the form of a manual alphabet, to improve the communication of mute or deaf people.

Drawing on Bonet's sign system, Charles-Michel de l'Épée published his manual alphabet in the 18th century; it has survived basically unchanged in France and North America to the present day.

Sign languages have often evolved around schools for deaf students. In 1755, Abbé de l'Épée founded the first school for deaf children in Paris; Laurent Clerc was arguably its most famous graduate. Clerc went to the United States with Thomas Hopkins Gallaudet to found the American School for the Deaf in Hartford, Connecticut, in 1817.[5] Gallaudet's son, Edward Miner Gallaudet, founded a school for the deaf in 1857 in Washington, D.C., which in 1864 became the National Deaf-Mute College. Now called Gallaudet University, it is still the only liberal arts university for deaf people in the world.

Generally, each spoken language has a sign language counterpart, inasmuch as each linguistic population will contain Deaf members who will generate a sign language. In much the same way that geographical or cultural forces isolate populations and lead to the generation of different and distinct spoken languages, the same forces operate on signed languages, so they tend to maintain their identities through time in roughly the same areas of influence as the local spoken languages. This occurs even though sign languages have no relation to the spoken languages of the lands in which they arise. There are notable exceptions to this pattern, however, as some geographic regions sharing a spoken language have multiple, unrelated signed languages. Variations within a 'national' sign language can usually be correlated to the geographic location of residential schools for the deaf.

International Sign, formerly known as Gestuno, is used mainly at international Deaf events such as the Deaflympics and meetings of the World Federation of the Deaf. Recent studies claim that while International Sign is a kind of pidgin, it is more complex than a typical pidgin and indeed is more like a full signed language.[6]

Excerpts from two Wikipedia articles: http://en.wikipedia.org/wiki/Sign_language 101202, 090902

In linguistic terms, sign languages are as rich and complex as any oral language, despite the common misconception that they are not "real languages". Professional linguists have studied many sign languages and found them to have every linguistic component required to be classed as true languages.[4]

Sign languages are not mime: signs are conventional, often arbitrary, and do not necessarily have a visual relationship to their referent, much as most spoken language is not onomatopoeic. While iconicity is more systematic and widespread in sign languages than in spoken ones, the difference is not categorical. [5] Nor are sign languages a visual rendition of an oral language. They have complex grammars of their own, and can be used to discuss any topic, from the simple and concrete to the lofty and abstract.

Sign languages, like oral languages, organize elementary, meaningless units (phonemes; once called cheremes in the case of sign languages) into meaningful semantic units. The elements of a sign are Handshape (or Handform), Orientation (or Palm Orientation), Location (or Place of Articulation), Movement, and Non-manual markers (or Facial Expression), summarised in the acronym HOLME.
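The five parameters can be pictured as the fields of a small record. The sketch below is only an illustration in Python: the class name `SignParameters`, the helper `differing_parameters`, and the placeholder values are assumptions made for this example and do not describe any real sign.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SignParameters:
    """One sign described by the five HOLME parameters (hypothetical model)."""
    handshape: str
    orientation: str
    location: str
    movement: str
    non_manual: str


def differing_parameters(a: SignParameters, b: SignParameters) -> list[str]:
    """Return the names of the parameters in which two signs differ."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


# Placeholder values only; they are not transcriptions of actual signs.
sign_a = SignParameters("flat hand", "palm down", "trunk", "upward", "neutral")
sign_b = SignParameters("closed fist", "palm down", "trunk", "upward", "neutral")

print(differing_parameters(sign_a, sign_b))
```

Here the two records differ only in `handshape`, which loosely mirrors the idea that units meaningless in themselves combine into distinct meaningful signs.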

Common linguistic features of deaf sign languages are extensive use of classifiers, a high degree of inflection, and a topic-comment syntax. Many unique linguistic features emerge from sign languages' ability to produce meaning in different parts of the visual field simultaneously. For example, the recipient of a signed message can read meanings carried by the hands, the facial expression and the body posture in the same moment. This is in contrast to oral languages, where the sounds that comprise words are mostly sequential (tone being an exception).

A common misconception is that sign languages are somehow dependent on oral languages, that is, that they are oral language spelled out in gesture, or that they were invented by hearing people. Hearing teachers in deaf schools, such as Thomas Hopkins Gallaudet, are often incorrectly referred to as “inventors” of sign language.

Manual alphabets (fingerspelling) are used in sign languages, mostly for proper names and technical or specialised vocabulary borrowed from spoken languages. The use of fingerspelling was once taken as evidence that sign languages were simplified versions of oral languages, but in fact it is merely one tool among many. Fingerspelling can sometimes be a source of new signs, which are called lexicalized signs.

On the whole, deaf sign languages are independent of oral languages and follow their own paths of development. For example, British Sign Language and American Sign Language are quite different and mutually unintelligible, even though the hearing people of Britain and America share the same oral language.

Similarly, countries which use a single oral language throughout may have two or more sign languages, whereas an area that contains more than one oral language might use only one sign language. South Africa, which has 11 official oral languages and a similar number of other widely used oral languages, is a good example of this. It has only one sign language, with two variants that arose because two major educational institutions for the deaf served different geographic areas of the country.

In 1972 Ursula Bellugi, a cognitive neuroscientist and psycholinguist, asked several people fluent in English and American Sign Language to tell a story in English, then switch to ASL or vice versa. The results showed an average of 4.7 words per second and 2.3 signs per second. However, only 122 signs were needed for a story, whereas 210 words were needed; thus the two versions of the story took almost the same time to finish. Bellugi then tested whether ASL omitted any crucial information. A bilingual person was given a story to translate into ASL. A second bilingual signer, who could see only the signs, then translated them back into English: the information conveyed in the signed story was identical to the original story. This study, although limited in scope, suggests that ASL signs carry more information than spoken English words: 1.5 propositions per second compared to 1.3 for spoken English.[9]
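The timing claim can be checked with simple arithmetic on the figures quoted above. The Python sketch below assumes that the reported rates and counts apply to the same story; the variable names are chosen here for illustration.

```python
# Figures as quoted in the text for Bellugi's comparison.
WORDS_PER_SECOND = 4.7
SIGNS_PER_SECOND = 2.3
WORDS_IN_STORY = 210
SIGNS_IN_STORY = 122

# Duration = number of units / units per second.
english_seconds = WORDS_IN_STORY / WORDS_PER_SECOND
asl_seconds = SIGNS_IN_STORY / SIGNS_PER_SECOND

print(f"English: {english_seconds:.1f} s")
print(f"ASL:     {asl_seconds:.1f} s")
```

With these numbers the spoken version comes to roughly 45 seconds and the signed version to roughly 53 seconds, so the lower signing rate is largely offset by the smaller number of signs.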

Sign languages exploit the unique features of the visual medium (sight). Oral language is linear. Only one sound can be made or received at a time. Sign language, on the other hand, is visual; hence a whole scene can be taken in at once. Information can be loaded into several channels and expressed simultaneously. As an illustration, in English one could utter the phrase, "I drove here". To add information about the drive, one would have to make a longer phrase or even add a second, such as, "I drove here along a winding road," or "I drove here. It was a nice drive." However, in American Sign Language, information about the shape of the road or the pleasing nature of the drive can be conveyed simultaneously with the verb 'drive' by inflecting the motion of the hand, or by taking advantage of non-manual signals such as body posture and facial expression, at the same time that the verb 'drive' is being signed. Therefore, whereas in English the phrase "I drove here and it was very pleasant" is longer than "I drove here," in American Sign Language the two may be the same length.

In fact, in terms of syntax, ASL shares more with spoken Japanese than it does with English.[6]

Although deaf sign languages have emerged naturally in deaf communities alongside or among spoken languages, they are unrelated to spoken languages and have different grammatical structures at their core. A group of sign "languages" known as manually coded languages are more properly understood as signed modes of spoken languages, and therefore belong to the language families of their respective spoken languages. There are, for example, several such signed encodings of English.

There has been very little historical linguistic research on sign languages, and few attempts to determine genetic relationships between sign languages, other than simple comparison of lexical data and some discussion about whether certain sign languages are dialects of a language or languages of a family. Languages may be spread through migration, through the establishment of deaf schools (often by foreign-trained educators), or due to political domination.

Language contact is common, making clear family classifications difficult — it is often unclear whether lexical similarity is due to borrowing or a common parent language. Contact occurs between sign languages, between signed and spoken languages (Contact Sign), and between sign languages and gestural systems used by the broader community. One author has speculated that Adamorobe Sign Language may be related to the "gestural trade jargon used in the markets throughout West Africa", in vocabulary and areal features including prosody and phonetics.[11]

[The following are sign language "families" listed in Wikipedia article:]

• BSL, Auslan and NZSL are usually considered to belong to a language family known as BANZSL. Maritime Sign Language and South African Sign Language are also related to BSL.[12]

• Japanese Sign Language, Taiwanese Sign Language and Korean Sign Language are thought to be members of a Japanese Sign Language family.

• French Sign Language family. There are a number of sign languages that emerged from French Sign Language (LSF), or were the result of language contact between local community sign languages and LSF. These include: French Sign Language, Italian Sign Language, Quebec Sign Language, American Sign Language, Irish Sign Language, Russian Sign Language, Dutch Sign Language, Flemish Sign Language, Belgian-French Sign Language, Spanish Sign Language, Mexican Sign Language, Brazilian Sign Language (LIBRAS), Catalan Sign Language and others.

¤ A subset of this group includes languages that have been heavily influenced by American Sign Language (ASL), or are regional varieties of ASL. Bolivian Sign Language is sometimes considered a dialect of ASL. Thai Sign Language is a mixed language derived from ASL and the native sign languages of Bangkok and Chiang Mai, and may be considered part of the ASL family. Others possibly influenced by ASL include Ugandan Sign Language, Kenyan Sign Language, Philippine Sign Language and Malaysian Sign Language.

• Anecdotal evidence suggests that Finnish Sign Language, Swedish Sign Language and Norwegian Sign Language belong to a Scandinavian Sign Language family.

• Icelandic Sign Language is known to have originated from Danish Sign Language, although significant differences in vocabulary have developed in the course of a century of separate development.

• Israeli Sign Language was influenced by German Sign Language.

• According to a SIL report, the sign languages of Russia, Moldova and Ukraine share a high degree of lexical similarity and may be dialects of one language, or distinct related languages. The same report suggested a "cluster" of sign languages centered around Czech Sign Language, Hungarian Sign Language and Slovakian Sign Language. This group may also include Romanian, Bulgarian, and Polish sign languages.

• Known isolates include Nicaraguan Sign Language, Al-Sayyid Bedouin Sign Language, and Providence Island Sign Language.

• Sign languages of Jordan, Lebanon, Syria, Palestine, and Iraq (and possibly Saudi Arabia) may be part of a sprachbund, or may be one dialect of a larger Eastern Arabic Sign Language.

The only comprehensive classification along these lines going beyond a simple listing of languages dates back to 1991.[13] The classification is based on the 69 sign languages from the 1988 edition of Ethnologue that were known at the time of the 1989 conference on sign languages in Montreal and 11 more languages the author added after the conference.[14]

... ... ...

In his classification, the author distinguishes between primary and alternative sign languages[16] and, subcategorically, between languages recognizable as single languages and languages thought to be composite groups.[17] The prototype-A class of languages includes all those sign languages that seemingly cannot be derived from any other language. Prototype-R languages are languages that are remotely modelled on prototype-A language by a process Kroeber (1940) called "stimulus diffusion". The classes of BSL(bfi)-, DGS(gsg)-, JSL-, LSF(fsl)- and LSG-derived languages represent "new languages" derived from prototype languages by linguistic processes of creolization and relexification.[18] Creolization is seen as enriching overt morphology in "gesturally signed" languages, as compared to reducing overt morphology in "vocally signed" languages.[19]

Linguistic typology (going back to Edward Sapir) is based on word structure and distinguishes morphological classes such as agglutinating/concatenating, inflectional, polysynthetic, incorporating, and isolating ones.

Sign languages vary in syntactic typology as there are different word orders in different languages. For example, ÖGS [Austrian Sign Language] is Subject-Object-Verb [SOV] while ASL [American Sign Language] is Subject-Verb-Object [SVO]. Correspondence to the surrounding spoken languages is not improbable.

Morphologically speaking, wordshape is the essential factor. Canonical wordshape results from the systematic pairing of the binary values of two features, namely syllabicity (mono- or poly-) and morphemicity (mono- or poly-). Brentari[20][21] treats sign languages as a single group, defined by the medium of communication (visual rather than auditory), whose canonical wordshape is monosyllabic and polymorphemic. That is, a single syllable (i.e. one word, one sign) can express several morphemes; for example, the subject and object of a verb determine the direction of the verb's movement (inflection). This is necessary for sign languages to achieve a production rate comparable to that of spoken languages, since producing one sign takes much longer than uttering one word; on a sentence-by-sentence comparison, however, signed and spoken languages proceed at approximately the same speed.[22]

Sign language differs from oral language in its relation to writing. The phonemic systems of oral languages are primarily sequential: that is, the majority of phonemes are produced in a sequence one after another, although many languages also have non-sequential aspects such as tone. As a consequence, traditional phonemic writing systems are also sequential, with at best diacritics for non-sequential aspects such as stress and tone.

Sign languages have a higher non-sequential component, with many "phonemes" produced simultaneously. For example, signs may involve fingers, hands, and face moving simultaneously, or the two hands moving in different directions. Traditional writing systems are not designed to deal with this level of complexity.

Partially because of this, sign languages are not often written. In those few countries with good educational opportunities available to the deaf, many deaf signers can read and write the oral language of their country at a level sufficient to consider them "functionally literate." However, in many countries, deaf education is very poor and/or very limited. As a consequence, most deaf people there have very little to no literacy in their country's spoken language.

However, there have been several attempts at developing scripts for sign languages. The Stokoe notation is a phonemic alphabet devised by William Stokoe for his 1965 Dictionary of American Sign Language. Designed specifically for ASL, it is limited in that it has no way of expressing facial expression. The more recent ASL-phabet is a minimal derivative of Stokoe along the lines of shorthand. The Hamburg Notation System (HamNoSys), on the other hand, is a detailed phonetic system that is not designed for any one sign language and is intended as a transcription system for researchers rather than as a practical script. SignWriting, a practical and by far the most popular system, can also be used for any sign language, and has adequate means of handling mouthing and facial expression. However, since it is iconic and not phonemic, there is no one-to-one correspondence with signs, which can often be written in multiple ways.

These systems are based on iconic symbols. Some, such as SignWriting and HamNoSys, are pictographic, being conventionalized pictures of the hands, face, and body; others, such as the Stokoe notation, are more iconic. Stokoe used letters of the Latin alphabet and Arabic numerals to indicate the handshapes used in fingerspelling, such as 'A' for a closed fist, 'B' for a flat hand, and '5' for a spread hand, but non-alphabetic symbols for location and movement, such as '[]' for the trunk of the body, '×' for contact, and '^' for an upward movement. David J. Peterson has attempted to create an ASCII-friendly phonetic transcription system for signing, known as the Sign Language International Phonetic Alphabet (SLIPA).

SignWriting, being pictographic, is able to represent simultaneous elements in a single sign. The Stokoe notation, on the other hand, is sequential, with a conventionalized order of a symbol for the location of the sign, then one for the hand shape, and finally one (or more) for the movement. The orientation of the hand is indicated with an optional diacritic before the hand shape. When two movements occur simultaneously, they are written one atop the other; when sequential, they are written one after the other. Neither the Stokoe nor HamNoSys scripts are designed to represent facial expressions or non-manual movements, both of which SignWriting accommodates easily, although this is being gradually corrected in HamNoSys. HamNoSys, however, is a linguistic notational system rather than a practical script.
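The ordering convention described above can be sketched as a small function. This is a toy linearization in Python, assuming plain strings for the symbols; the function name `stokoe_order` and the sample combinations are hypothetical and are not taken from Stokoe's dictionary. Simultaneous movements, which the notation stacks vertically, are joined with `/` here only because a plain string cannot stack symbols.

```python
def stokoe_order(location, handshape, movements, orientation=""):
    """Arrange symbols in the order the text describes:
    location, optional orientation diacritic, handshape, movement(s).

    `movements` is a list; each item is either one symbol (sequential)
    or a tuple of symbols that occur simultaneously.
    """
    parts = [location, orientation, handshape]
    for move in movements:
        if isinstance(move, tuple):
            # Simultaneous movements: stacked in the real notation,
            # joined with "/" in this plain-text sketch (an assumption).
            parts.append("/".join(move))
        else:
            parts.append(move)
    return "".join(parts)


# Symbols quoted in the text: "[]" trunk, "B" flat hand, "5" spread hand,
# "^" upward movement, "×" contact. The combinations are illustrative only.
print(stokoe_order("[]", "B", ["^", "×"]))    # two sequential movements
print(stokoe_order("[]", "5", [("^", "×")]))  # two simultaneous movements
```

The point of the sketch is the fixed slot order, which is what makes the notation sequential even when the sign itself is produced all at once.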

One of the first demonstrations of the ability for telecommunications to help sign language users communicate with each other occurred when AT&T's videophone (trademarked as the "Picturephone") was introduced to the public at the 1964 New York World's Fair; two deaf users were able to freely communicate with each other between the fair and another city.[23]

Various organizations have also conducted research on signing via videotelephony.

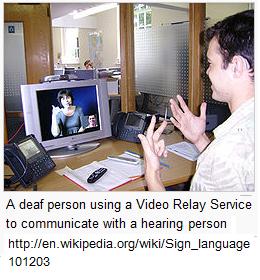

Sign language interpretation services via Video Remote Interpreting (VRI) or a Video Relay Service (VRS) are useful in the present day where one of the parties is deaf, hard-of-hearing or speech-impaired (mute) and the other is Hearing. In VRI, a sign-language user and a Hearing person are in one location, and the interpreter is in another (rather than being in the same room with the clients as would normally be the case). The interpreter communicates with the sign-language user via a video telecommunications link, and with the Hearing person by an audio link. In VRS, the sign-language user, the interpreter, and the Hearing person are in three separate locations, thus allowing the two clients to talk to each other on the phone through the interpreter.

In such cases the interpretation flow is normally between a signed language and an oral language that are customarily used in the same country, such as French Sign Language (FSL) to spoken French, Spanish Sign Language (SSL) to spoken Spanish, British Sign Language (BSL) to spoken English, and American Sign Language (ASL) also to spoken English (since BSL and ASL are completely distinct), etc. Multilingual sign language interpreters, who can also translate across principal languages (such as to and from SSL and to and from spoken English), are also available, albeit less frequently. Such activities involve considerable effort on the part of the interpreter, since sign languages are distinct natural languages with their own construction and syntax, different from the oral language used in the same country.

With video interpreting, sign language interpreters work remotely with live video and audio feeds, so that the interpreter can see the deaf party, and converse with the hearing party, and vice versa. Much like telephone interpreting, video interpreting can be used for situations in which no on-site interpreters are available. However, video interpreting cannot be used for situations in which all parties are speaking via telephone alone. VRI and VRS interpretation requires all parties to have the necessary equipment. Some advanced equipment enables interpreters to remotely control the video camera, in order to zoom in and out or to point the camera toward the party that is signing.

Sign systems are sometimes developed within a single family. For instance, when hearing parents with no sign language skills have a deaf child, an informal system of signs will naturally develop, unless repressed by the parents. The term for these mini-languages is home sign (sometimes homesign or kitchen sign).[24]

Home sign arises due to the absence of any other way to communicate. Within the span of a single lifetime and without the support or feedback of a community, the child naturally invents signals to facilitate the meeting of his or her communication needs. Although this kind of system is grossly inadequate for the intellectual development of a child and it comes nowhere near meeting the standards linguists use to describe a complete language, it is a common occurrence. No type of Home Sign is recognized as an official language.

Gesture is a typical component of spoken languages. More elaborate systems of manual communication have developed in places or situations where speech is not practical or permitted, such as cloistered religious communities, scuba diving, television recording studios, loud workplaces, stock exchanges, baseball, hunting (by groups such as the Kalahari bushmen), or in the game Charades. In rugby union the referee uses a limited but defined set of signs to communicate decisions to the spectators. Recently, there has been a movement to teach and encourage the use of sign language with toddlers before they learn to talk, because such young children can communicate effectively with signed languages well before they are physically capable of speech. This is typically referred to as Baby Sign. There is also a movement to use signed languages more with non-deaf and non-hard-of-hearing children with other causes of speech impairment or delay, for the obvious benefit of effective communication without dependence on speech.

On occasion, where the prevalence of deaf people is high enough, a deaf sign language has been taken up by an entire local community. Famous examples of this include Martha's Vineyard Sign Language in the USA, Kata Kolok in a village in Bali, Adamorobe Sign Language in Ghana and Yucatec Maya sign language in Mexico. In such communities deaf people are not socially disadvantaged.

Many Australian Aboriginal sign languages arose in a context of extensive speech taboos, such as during mourning and initiation rites. They are or were especially highly developed among the Warlpiri, Warumungu, Dieri, Kaytetye, Arrernte, and Warlmanpa, and are based on their respective spoken languages.

A pidgin sign language arose among tribes of American Indians in the Great Plains region of North America (see Plains Indian Sign Language). It was used to communicate among tribes with different spoken languages. Today it is used especially among the Crow, Cheyenne, and Arapaho. Unlike other sign languages developed by hearing people, it shares the spatial grammar of deaf sign languages.

The gestural theory states that vocal human language developed from a gestural sign language.[25] An important question for gestural theory is what caused the shift to vocalization.[26]

Scientists have on several occasions taught non-human primates basic signs in order to communicate with humans.[27] Notable examples are:

• Chimpanzees: Washoe and Loulis

• Gorillas: Michael and Koko.

Insert from: http://en.wikipedia.org/wiki/Washoe 101204

Washoe (c. September 1965 – October 30, 2007) was a chimpanzee who was the first non-human to learn to use some of the signs of a human language, namely ASL. She also passed on some of her knowledge to her adopted son, Loulis.[2][3]

As part of a research experiment on animal language acquisition, Washoe developed an ability to communicate with humans using ASL. She was named for Washoe County, Nevada, where she was raised and taught to use ASL. Washoe had lived at Central Washington University since 1980; on October 30, 2007, officials from the Chimpanzee and Human Communication Institute on the CWU campus announced that she had died at the age of 42.

... previous attempts to teach chimpanzees to imitate vocal languages (the Gua and Vicki projects) had failed ... because chimps are physically unable to produce the voiced sounds required for spoken language. Their solution was to utilize the chimpanzee's ability to create diverse body gestures, which is how they communicate in the wild, by starting a language project based on ASL.

Deaf communities are widespread throughout the world, and the culture within them is very rich. Sometimes it does not even intersect with the culture of hearing people, because of the various impediments that keep hard-of-hearing people from perceiving auditory information.

Some sign languages have obtained some form of legal recognition, while others have no status at all.

End of TIL file